Installing COTS applications using Azure Gallery VM Applications

When you need to install COTS (commercial off-the-shelf) applications on virtual machines in Azure, you have multiple options. One of them is Azure Gallery VM Applications. As the name implies, it’s part of Azure Compute Gallery, formerly known as Azure Shared Image Gallery. VM Applications simplifies managing and deploying software packages on virtual machines (VMs) at scale. It’s especially useful when you need to maintain specific applications, configurations, or tools across individual VMs or the instances of a virtual machine scale set (VMSS). Applications and their versions are managed centrally, which makes it easier to keep VM environments consistent.

In this article, we will walk through the process of installing COTS applications using Azure Gallery VM Applications. We will use an example of installing a custom application on a Windows VM. The same process can be applied to Linux VMs as well.

Installing COTS applications using Azure Gallery VM Applications consists of the following steps:

- Create the Application: First, you create a VM application in the Azure Compute Gallery. This is where you store the common metadata for all the versions under it.

- Define Versions: Each application has versions, similar to VM image versions, and you can select the required version for deployment.

- Assign to VMs or VMSS: You can assign the created application to individual VMs or a VMSS. When deployed, the VM or VMSS instances automatically pick up and install the specified application version.

Prerequisites

Before we can create the first application definition, we need an Azure Compute Gallery. If you haven’t created one yet, you can use the following Terraform code.

```hcl
resource "azurerm_resource_group" "gallery-resource-group" {
  name     = "rg-${var.workload}-gallery-${var.environment}-${var.location_short}-001"
  location = var.location
  tags     = var.tags
}

resource "azurerm_shared_image_gallery" "gallery" {
  name                = "gal${var.workload}${var.environment}${var.location_short}001"
  resource_group_name = azurerm_resource_group.gallery-resource-group.name
  location            = var.location
  description         = "Shared image gallery for Virtual Machine applications"
  tags                = var.tags
}
```
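The snippets in this article reference a few input variables and two providers that are not shown. A minimal sketch of what they could look like is given below. The variable names are taken from the code above; their types, descriptions, and the empty default for tags are assumptions, as is the choice to leave provider versions unpinned.

```hcl
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
    # Used for the time_static / time_offset resources behind the SAS token.
    time = {
      source = "hashicorp/time"
    }
  }
}

provider "azurerm" {
  # Depending on the provider version, a subscription ID may also have to be
  # supplied here or through the ARM_SUBSCRIPTION_ID environment variable.
  features {}
}

# Assumed shapes for the variables used in the resource names above.
variable "workload" {
  type        = string
  description = "Short workload name used in resource names."
}

variable "environment" {
  type        = string
  description = "Environment abbreviation used in resource names."
}

variable "location" {
  type        = string
  description = "Azure region for the gallery and related resources."
}

variable "location_short" {
  type        = string
  description = "Abbreviated region name used in resource names."
}

variable "tags" {
  type        = map(string)
  description = "Tags applied to all resources."
  default     = {}
}
```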

Besides the Compute Gallery, we also need a storage account to hold the installers of the COTS applications we’re going to install. The following Terraform code creates a storage account and a private container.

```hcl
resource "azurerm_storage_account" "installer_storage" {
  # Storage account names must be globally unique; change this value for your own deployment.
  name                     = "stginstallerdemo"
  resource_group_name      = azurerm_resource_group.gallery-resource-group.name
  location                 = var.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}

resource "azurerm_storage_container" "installer_container" {
  name                  = "installers"
  storage_account_name  = azurerm_storage_account.installer_storage.name
  container_access_type = "private"
}
```

To make sure the installers are only accessible for a limited time, we can create a SAS token for the container. The following Terraform code creates a token with read and list permissions that is valid for one day.

```hcl
data "azurerm_storage_account_blob_container_sas" "sas_token" {
  connection_string = azurerm_storage_account.installer_storage.primary_connection_string
  container_name    = azurerm_storage_container.installer_container.name
  https_only        = true

  # Validity window of the token: one day, starting at the first apply.
  start  = time_static.today.rfc3339
  expiry = time_offset.tomorrow.rfc3339

  permissions {
    read   = true
    add    = false
    create = false
    write  = false
    delete = false
    list   = true
  }
}
```
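The SAS token refers to time_static.today and time_offset.tomorrow, which are not defined anywhere in this article. A minimal sketch of those two resources, assuming they come from the hashicorp/time provider, could look like this:

```hcl
# Captures the timestamp of the first apply and keeps it stable afterwards.
resource "time_static" "today" {}

# One day after the static timestamp above.
resource "time_offset" "tomorrow" {
  base_rfc3339 = time_static.today.rfc3339
  offset_days  = 1
}
```

Because both timestamps are stored in state, the token keeps the same validity window on later runs; it is not refreshed unless these resources are replaced.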

With that in place, we can start creating the application definition.

Create the Application

Creating an application consists of two steps: defining the application and defining the application version. The application definition can be created using the following Terraform code. In this blog, we’re going to install Java on the VMs, so let’s call it ‘java’.

```hcl
resource "azurerm_gallery_application" "java" {
  name              = "java"
  description       = "java Installer"
  gallery_id        = azurerm_shared_image_gallery.gallery.id
  location          = var.java.location
  supported_os_type = var.java.supported_os_type
  tags              = var.tags
}
```

Next, we need to define the application version, which has to point to the installer file. We therefore first upload the installer to our storage container and then create the version. The following Terraform code does both for Java.

```hcl
resource "azurerm_storage_blob" "installer_blob" {
  name                   = "jdk-23_windows-x64_bin.msi"
  storage_account_name   = azurerm_storage_account.installer_storage.name
  storage_container_name = azurerm_storage_container.installer_container.name
  type                   = "Block"
  source                 = "installers/jdk-23_windows-x64_bin.msi"
}
```

```hcl
resource "azurerm_gallery_application_version" "java23" {
  name                   = "23.0.0"
  gallery_application_id = azurerm_gallery_application.java.id
  location               = var.java.location
  tags                   = var.tags

  # Name the downloaded package gets on the VM. Without it, the file is named
  # after the application definition ("java") and has no extension.
  package_file = "jdk-23_windows-x64_bin.msi"

  manage_action {
    # Silent MSI install; the command runs in the directory that holds the package.
    install = "msiexec /i jdk-23_windows-x64_bin.msi /qn /norestart"
    # Assumption: the package is still in the working directory when the
    # application is removed, so the same MSI can drive a silent uninstall.
    remove = "msiexec /x jdk-23_windows-x64_bin.msi /qn /norestart"
  }

  source {
    # Referencing the blob makes Terraform upload the installer before it creates the version.
    media_link = "${azurerm_storage_blob.installer_blob.url}?${data.azurerm_storage_account_blob_container_sas.sas_token.sas}"
  }

  dynamic "target_region" {
    for_each = var.java.target_regions

    content {
      exclude_from_latest    = target_region.value.exclude_from_latest
      name                   = target_region.value.location
      regional_replica_count = target_region.value.regional_replica_count
      storage_account_type   = target_region.value.storage_account_type
    }
  }
}
```

In the above code, we first upload the installer file to the storage account and then create the application version. The manage_action block contains the commands to install and remove the application; in this case, Java is installed silently with msiexec. Mind the fact that, by default, the package is downloaded to the VM under the name of the application definition, not the original file name. Setting package_file gives it a proper file name, so the install command can refer to the MSI directly; without it, the command would first have to rename the file. The source block contains the link to the installer file, with the SAS token appended so the file is only accessible for a limited time. The target_region block lists the regions where the application version is available; Azure Compute Gallery automatically replicates the installer to those regions.
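The application and its version read their settings from var.java, which is not shown in this article. Its shape can be inferred from the attributes used above; a minimal sketch could look like the following. The description and all default values (region, replica count, storage type) are placeholders chosen for illustration, not values from the original setup.

```hcl
# Hypothetical definition of var.java, inferred from the attributes
# referenced in the application and application version resources.
variable "java" {
  description = "Settings for the Java VM application."

  type = object({
    location          = string
    supported_os_type = string
    target_regions = list(object({
      location               = string
      regional_replica_count = number
      exclude_from_latest    = bool
      storage_account_type   = string
    }))
  })

  # Example values only; adjust the regions to your own environment.
  default = {
    location          = "westeurope"
    supported_os_type = "Windows"
    target_regions = [
      {
        location               = "westeurope"
        regional_replica_count = 1
        exclude_from_latest    = false
        storage_account_type   = "Standard_LRS"
      }
    ]
  }
}
```

Adding a second object to target_regions would make the dynamic block emit a second target_region, so the installer is replicated to that region as well.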

Assign to VMs

Now that we have the application and the version, we can assign it to a VM. The following Terraform code assigns the Java application to a VM.

```hcl
resource "azurerm_virtual_machine_gallery_application_assignment" "example" {
  gallery_application_version_id = azurerm_gallery_application_version.java23.id
  virtual_machine_id             = azurerm_windows_virtual_machine.gallery_vm.id
}
```
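As mentioned in the introduction, the application can also be assigned to a VMSS. The sketch below shows one way this could look, assuming the azurerm provider version in use supports the gallery_application block on scale sets. The scale set itself, its names, the instance count, and the vmss_admin_password variable are hypothetical and only there to make the example complete; the subnet is the one from the test VM code at the end of this blog.

```hcl
# Hypothetical variable so the password is not hard-coded.
variable "vmss_admin_password" {
  type      = string
  sensitive = true
}

resource "azurerm_windows_virtual_machine_scale_set" "gallery_vmss" {
  name                 = "gallery-vmss"
  computer_name_prefix = "galvm" # Windows computer names are length-limited
  resource_group_name  = azurerm_resource_group.gallery-resource-group.name
  location             = azurerm_resource_group.gallery-resource-group.location
  sku                  = "Standard_DS1_v2"
  instances            = 2
  admin_username       = "adminuser"
  admin_password       = var.vmss_admin_password

  source_image_reference {
    publisher = "MicrosoftWindowsServer"
    offer     = "WindowsServer"
    sku       = "2019-Datacenter"
    version   = "latest"
  }

  os_disk {
    caching              = "ReadWrite"
    storage_account_type = "Standard_LRS"
  }

  network_interface {
    name    = "gallery-vmss-nic"
    primary = true

    ip_configuration {
      name      = "internal"
      primary   = true
      subnet_id = azurerm_subnet.gallery_subnet.id
    }
  }

  # Every instance of the scale set gets this application version installed.
  gallery_application {
    version_id = azurerm_gallery_application_version.java23.id
  }
}
```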

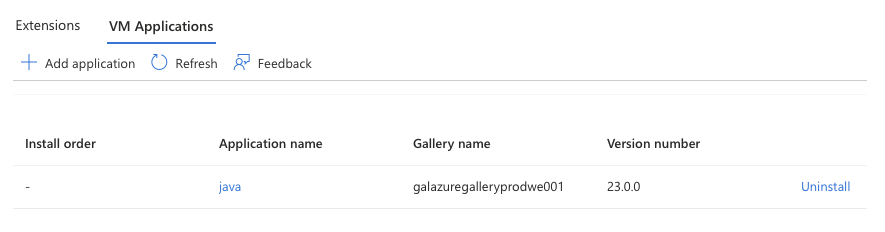

At the end of this blog, you will find the Terraform code to create a test (Windows) VM. When you deploy the code, the application will be installed on the VM. You can check the installation status in the Azure portal. Go to the VM, click on the Extensions + Applicatiions menu, and then click on the VM applications. There you will see the status of the installation as shown below.

Code to create a test VM

```hcl
resource "azurerm_virtual_network" "gallery_vnet" {
  name                = "gallery-vnet"
  resource_group_name = azurerm_resource_group.gallery-resource-group.name
  location            = azurerm_resource_group.gallery-resource-group.location
  address_space       = ["10.0.0.0/16"]
}

resource "azurerm_subnet" "gallery_subnet" {
  name                 = "gallery-subnet"
  resource_group_name  = azurerm_resource_group.gallery-resource-group.name
  virtual_network_name = azurerm_virtual_network.gallery_vnet.name
  address_prefixes     = ["10.0.0.0/24"]
}

# Create the NIC for the Windows VM
resource "azurerm_network_interface" "gallery_nic" {
  name                = "gallery-nic"
  resource_group_name = azurerm_resource_group.gallery-resource-group.name
  location            = azurerm_resource_group.gallery-resource-group.location

  ip_configuration {
    name                          = "gallery-ipconfig"
    subnet_id                     = azurerm_subnet.gallery_subnet.id
    private_ip_address_allocation = "Dynamic"
  }
}

# Create a Windows VM from a public image. The VM has no public IP, so remote
# access requires something like Azure Bastion, which is not created here.
resource "azurerm_windows_virtual_machine" "gallery_vm" {
  name                  = "gallery-vm"
  resource_group_name   = azurerm_resource_group.gallery-resource-group.name
  location              = azurerm_resource_group.gallery-resource-group.location
  size                  = "Standard_DS1_v2"
  admin_username        = "adminuser"
  admin_password        = "Password1234!" # demo only; never hard-code credentials in real deployments
  network_interface_ids = [azurerm_network_interface.gallery_nic.id]

  # The VM agent is needed to install VM applications.
  provision_vm_agent = true

  os_disk {
    caching              = "ReadWrite"
    storage_account_type = "Standard_LRS"
  }

  source_image_reference {
    publisher = "MicrosoftWindowsServer"
    offer     = "WindowsServer"
    sku       = "2019-Datacenter"
    version   = "latest"
  }
}
```